Lambda Architecture with Apache Spark

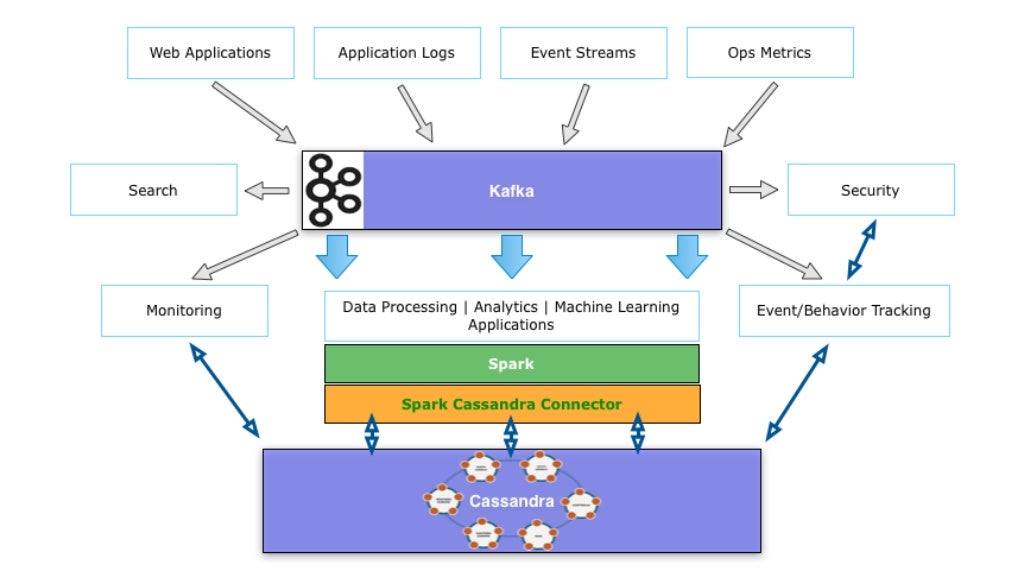

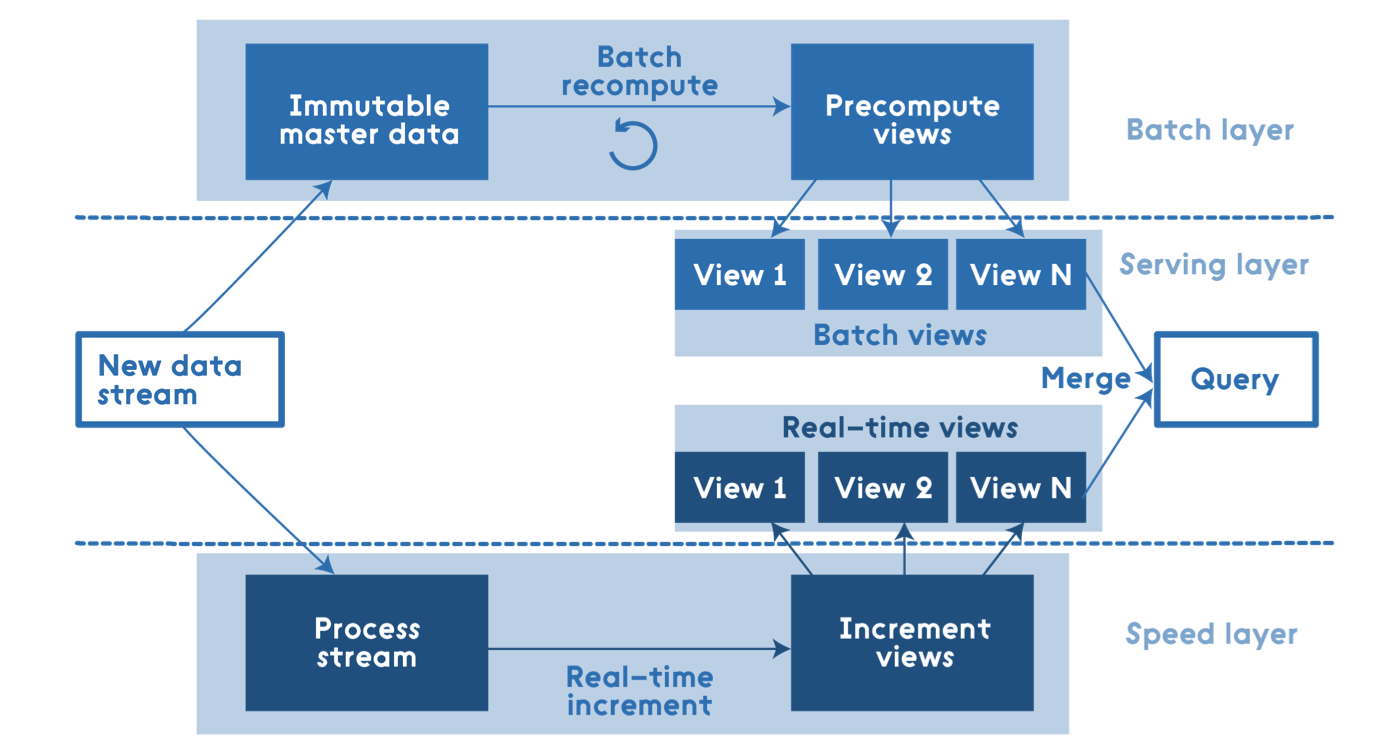

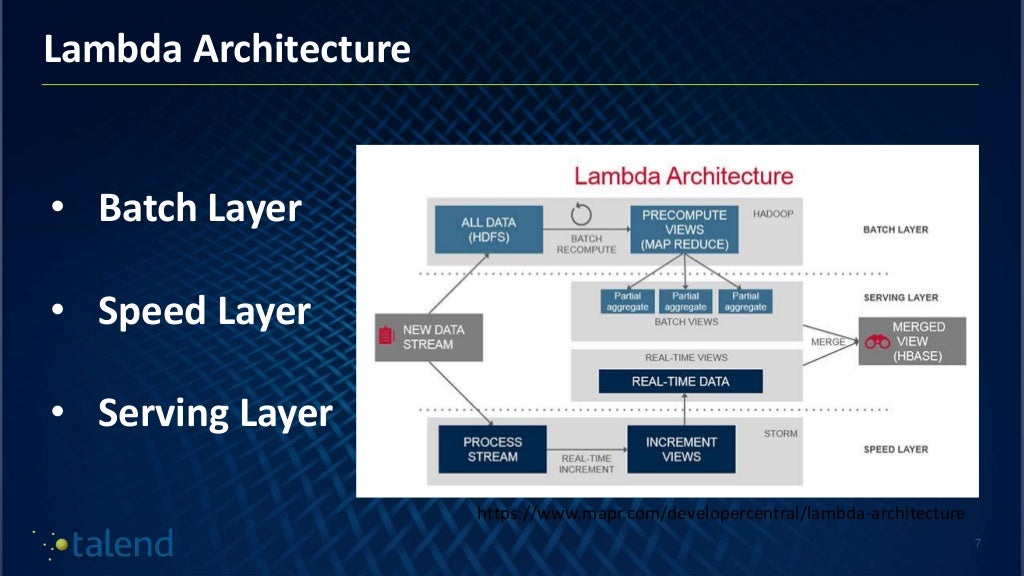

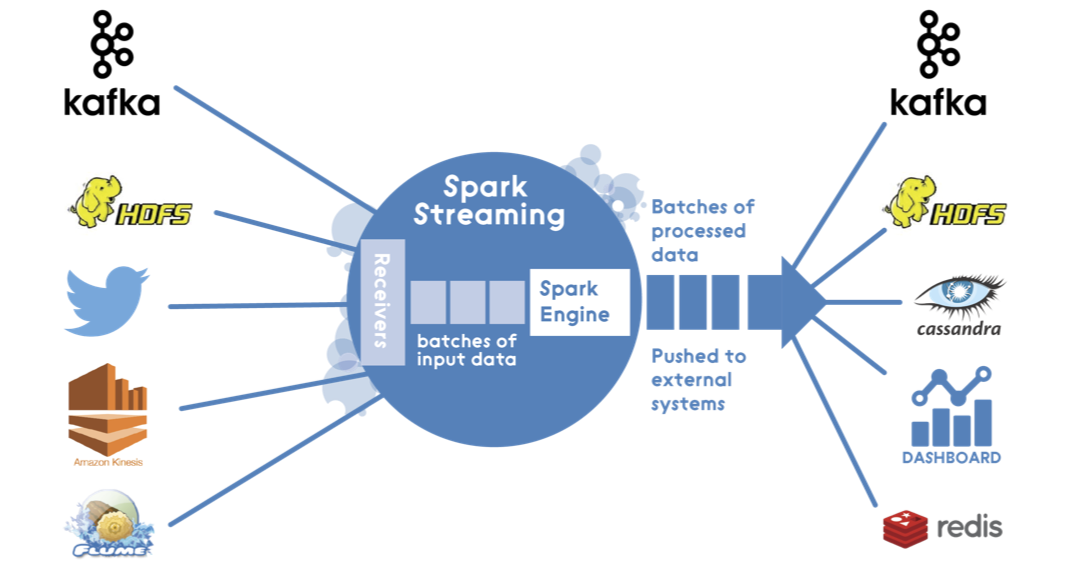

Lambda architecture is a data-processing architecture designed to handle massive quantities of data by taking advantage of both batch and stream-processing methods. Stream-processing technologies typically used in the speed layer include Apache Storm, SQLstream, Apache Samza, Apache Spark, and Azure Stream Analytics. Output is typically stored on fast NoSQL databases, or as a commit log.

Lambda architecture with Azure Cosmos DB and Apache Spark Microsoft Docs

Lambda Architecture. We have been running a Lambda architecture with Spark for more than 2 years in production now. The Lambda architecture provides a robust system that is fault-tolerant against.

Lambda Architecture with Apache Spark DZone

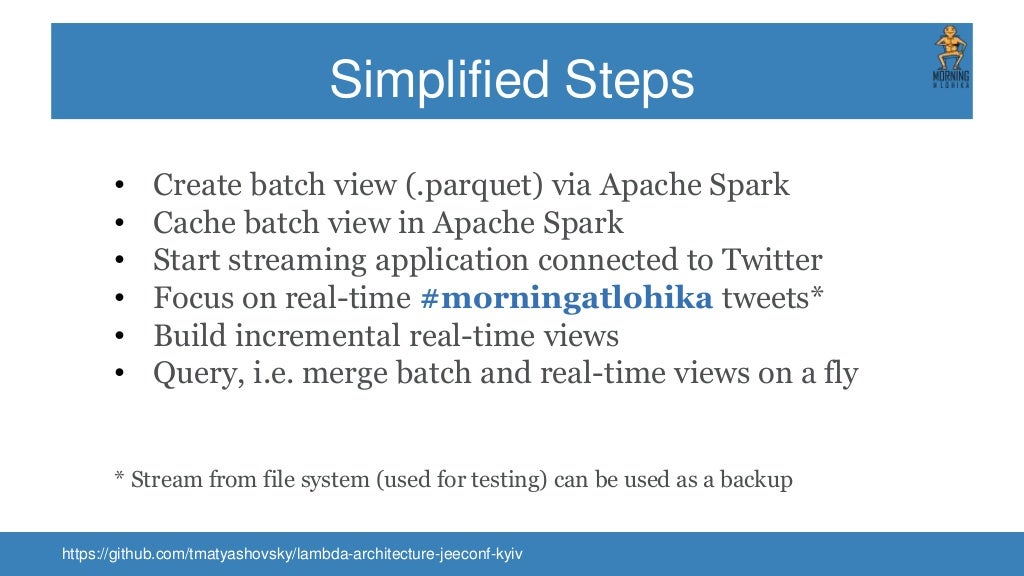

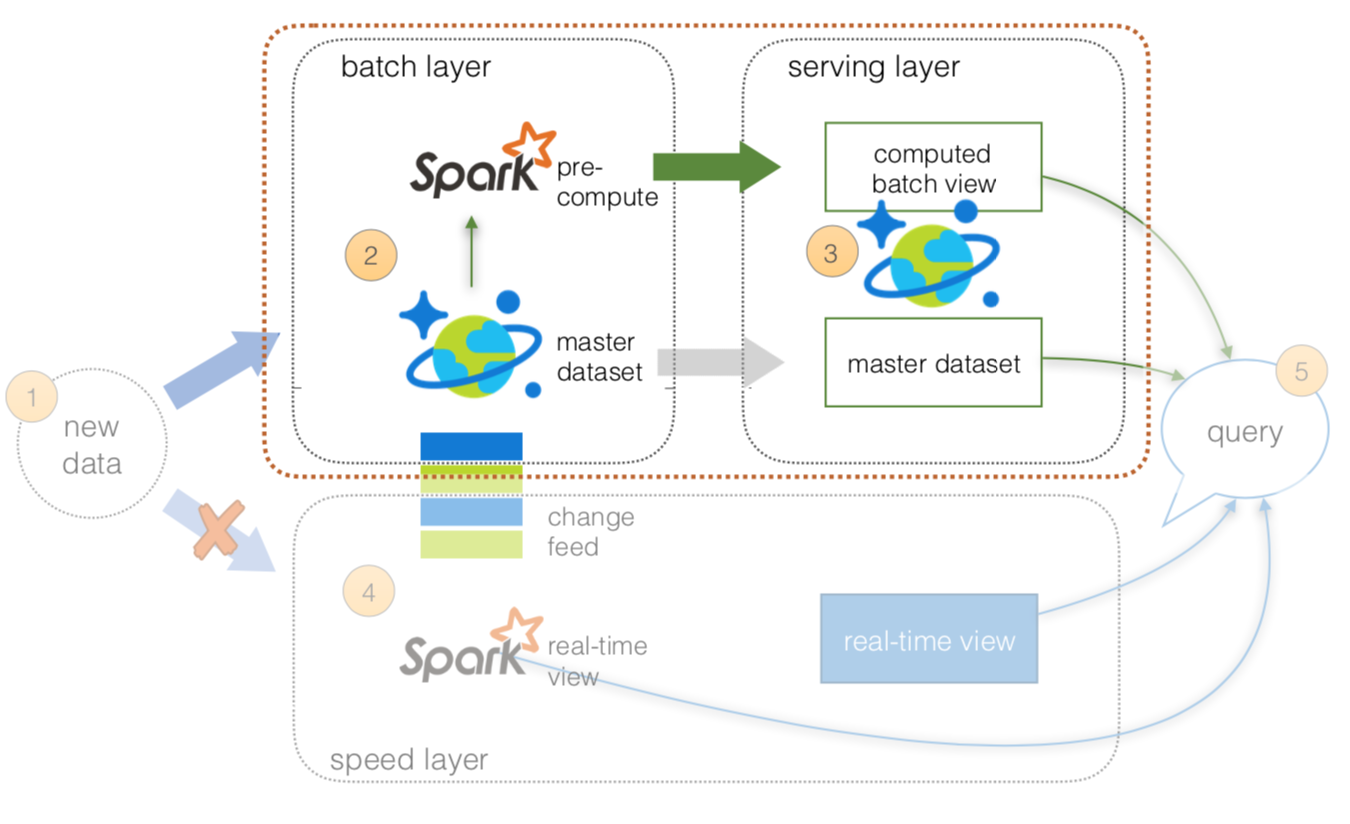

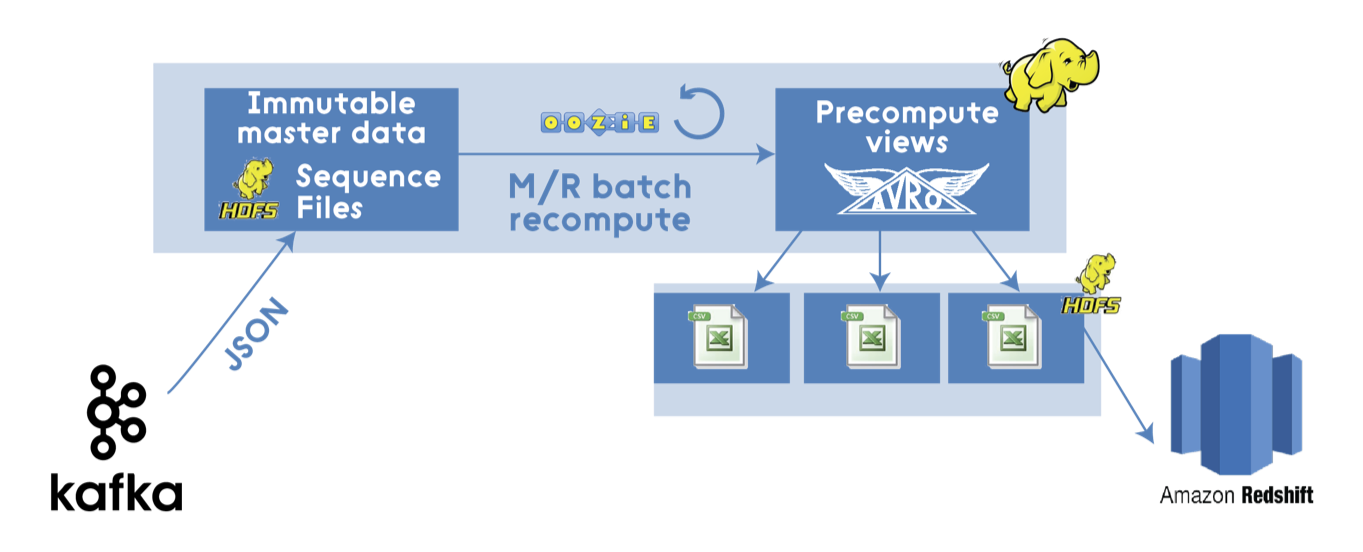

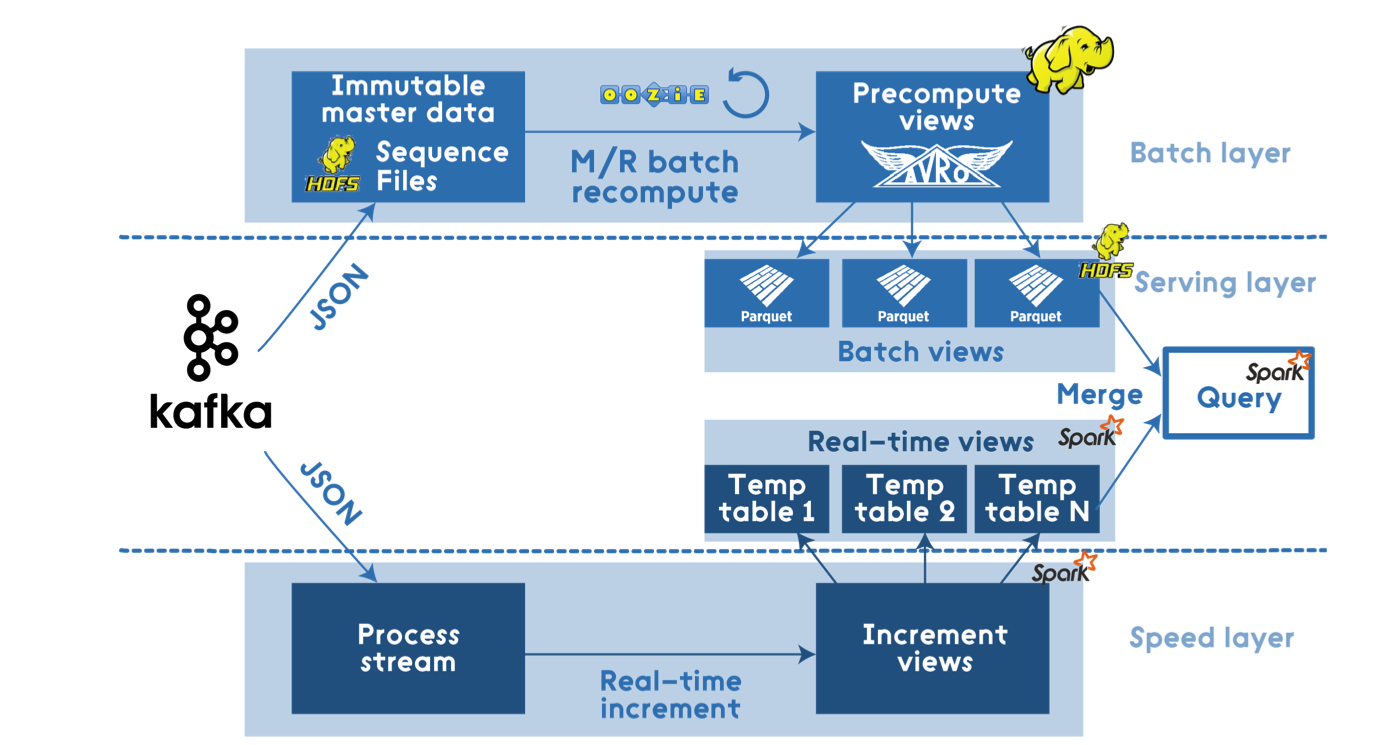

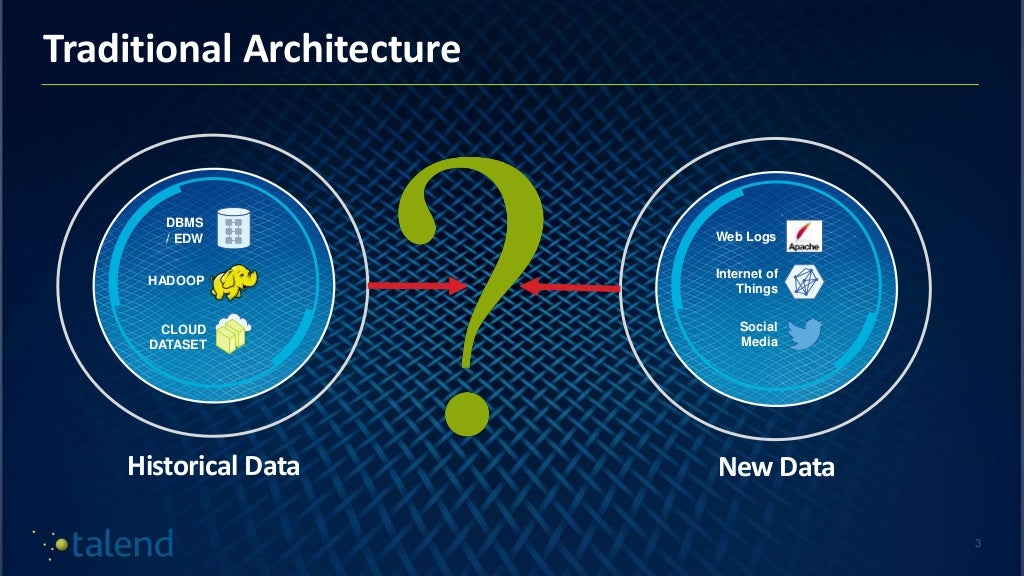

Lambda architecture consists of an ingestion layer, a batch layer, a speed layer (or stream layer), and a serving layer. Batch layer: The batch processing layer handles large volumes of historical data and stores the results in a centralized data store, such as a data warehouse or distributed file system. This layer uses frameworks like Hadoop or Spark for efficient information processing.
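The layer split described above can be illustrated without a cluster. The following is a minimal sketch in plain Python, not Spark code: the event list, the `batch_cutoff` parameter, and all function names are hypothetical and chosen only for illustration. It assumes the batch layer has processed everything up to a cutoff time, the speed layer covers what arrived afterwards, and the serving layer merges the two views at query time.

```python
from collections import Counter

# Hypothetical event log: (timestamp_seconds, page) pairs standing in for the master dataset.
EVENTS = [
    (10, "home"), (20, "cart"), (35, "home"),
    (50, "home"), (65, "cart"), (80, "checkout"),
]


def batch_layer(events, batch_cutoff):
    """Recompute the batch view from all events up to the last batch run."""
    return Counter(page for ts, page in events if ts <= batch_cutoff)


def speed_layer(events, batch_cutoff):
    """Cover only the events the batch view has not absorbed yet."""
    return Counter(page for ts, page in events if ts > batch_cutoff)


def serving_layer(batch_view, realtime_view):
    """Answer queries by merging the batch view with the real-time view."""
    return batch_view + realtime_view


batch_view = batch_layer(EVENTS, batch_cutoff=40)
realtime_view = speed_layer(EVENTS, batch_cutoff=40)
merged = serving_layer(batch_view, realtime_view)
print(dict(merged))
```

In a real deployment the batch view would typically be produced by a Spark or Hadoop job and the real-time view by a streaming job; the merge step is the part this sketch is meant to show.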

What is Apache Spark BigData_Spark_Tutorial

Lambda architecture is a data-processing design pattern to handle massive quantities of data. Spark Streaming and Spark SQL on top of an Amazon EMR cluster are widely used. Amazon Simple Storage Service (Amazon S3) forms the backbone of such architectures providing the

Lambda Architecture with Spark, Spark Streaming, Kafka, Cassandra, Ak…

Lambda Architecture with Apache Spark. Michael Hausenblas, Chief Data Engineer, MapR. Big Data Beers, Berlin, 2014-07-24.

Lambda Architecture with Apache Spark DZone

The given figure depicts the Lambda architecture as a combination of batch processing and.

Lambda architecture with Spark

Spark on AWS Lambda (SoAL) is a framework that runs Apache Spark workloads on AWS Lambda. It's designed for both batch and event-based workloads, handling data payload sizes from 10 KB to 400 MB. This post highlights the SoAL architecture, provides infrastructure as code (IaC), offers step-by-step instructions for setting up the SoAL framework in your AWS account, and outlines SoAL.

Lambda Architecture with Apache Spark

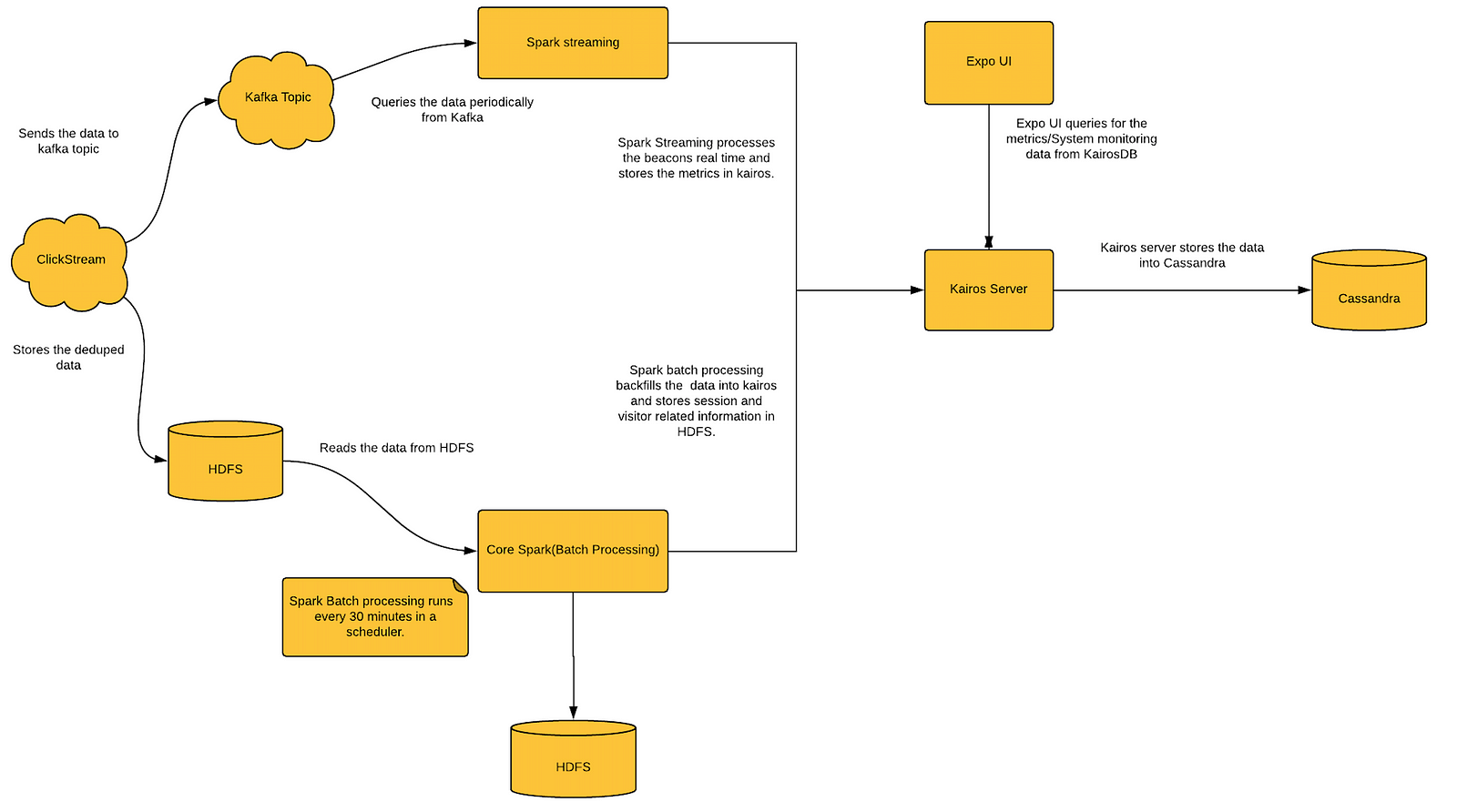

I'm trying to implement a Lambda Architecture using the following tools: Apache Kafka to receive all the datapoints, Spark for batch processing (Big Data), Spark Streaming for real-time processing (Fast Data), and Cassandra to store the results. Also, all the datapoints I receive are related to a user session, and therefore, for the batch processing I'm.

How we built a data pipeline with Lambda Architecture using Spark/Spark Streaming

Spark Streaming is essentially a sequence of small batch processes that can reach latency as low as one second. Trident is a high-level abstraction on top of Storm that can process streams as small.
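The micro-batch idea can be sketched in a few lines of plain Python. This is an illustration of the concept only, not the Spark Streaming API: the `micro_batches` function and the sample events are hypothetical, and the event timestamps stand in for arrival times. Events are grouped into consecutive fixed-length windows, and each window is then handed on as one small batch.

```python
from collections import defaultdict


def micro_batches(events, interval_seconds):
    """Group (timestamp, value) events into consecutive fixed-length windows."""
    buckets = defaultdict(list)
    for ts, value in events:
        # The window index is the number of whole intervals elapsed before ts.
        buckets[int(ts // interval_seconds)].append(value)
    # Emit the batches in time order, each labelled with its window start.
    return [(index * interval_seconds, buckets[index]) for index in sorted(buckets)]


events = [(0.2, "a"), (0.9, "b"), (1.1, "c"), (2.5, "d"), (2.7, "e")]
for window_start, batch in micro_batches(events, interval_seconds=1):
    print(window_start, len(batch))
```

With a one-second interval, a result for an event becomes available once its window closes, which is why the achievable latency is tied to the batch interval.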

Lambda architecture with Spark

Lambda architecture is a way of processing massive quantities of data (i.e. "Big Data") that provides access to batch-processing and stream-processing methods with a hybrid approach. Lambda architecture is used to solve the problem of computing arbitrary functions. The lambda architecture itself is composed of 3 layers:

Lambda Architecture with Apache Spark

Apache Spark is used for data streaming, graph processing, and batch data processing. Lambda architecture is a complex infrastructure because it involves many layers. Although the offline layer and the real-time stream face different scenarios, their internal processing logic is the same, so there are many duplicate modules and require different.
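One common way to limit the duplication described above is to keep the per-record logic in a single function and call it from both paths. The sketch below assumes a hypothetical record format (a dict with a `user` field) and hypothetical names (`clean`, `batch_count`, `StreamingCount`); it is plain Python rather than Spark code, and only shows the shape of the idea.

```python
from collections import Counter


def clean(record):
    """Shared business logic: normalise one raw record, or return None to drop it."""
    user = record.get("user", "").strip().lower()
    return user or None


def batch_count(records):
    """Batch path: process the full historical dataset in one pass."""
    return Counter(user for user in map(clean, records) if user)


class StreamingCount:
    """Streaming path: apply the same logic one record at a time."""

    def __init__(self):
        self.counts = Counter()

    def on_record(self, record):
        user = clean(record)
        if user:
            self.counts[user] += 1


records = [{"user": " Ann "}, {"user": "bob"}, {"user": ""}, {"user": "ANN"}]
stream = StreamingCount()
for record in records:
    stream.on_record(record)

print(batch_count(records) == stream.counts)
```

Because both paths call `clean`, a change to the normalisation rules is made in one place. Using Spark for both the batch and the streaming job makes this kind of sharing easier, since both jobs can import the same code.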

Learn Building Lambda Architecture with the Spark Streaming

Building a Lambda architecture (batch and speed layers) in order to set up a real-time system that can handle real-time data at scale, with robustness and fault tolerance as first-class citizens, using.

Lambda Architecture with Spark

Spark - One Stop Solution for Lambda Architecture. Apache Spark scores quite well as far as the non-functional requirements of batch and speed layers are concerned: Scalability: Spark the cluster.

Spark Streaming Lambda Architecture The Architect

But even in this scenario there is a place for Apache Spark in Kappa Architecture too, for instance for a stream processing system.

Lambda architecture with Spark

Applying the Lambda Architecture with Spark, Kafka, and Cassandra. by Ahmad Alkilani. This course introduces how to build robust, scalable, real-time big data systems using a variety of Apache Spark's APIs, including the Streaming, DataFrame, SQL, and DataSources APIs, integrated with Apache Kafka, HDFS and Apache Cassandra.

Lambda Architecture with Apache Spark DZone Big Data

The solution to the one-hour delay problem is an approach known as Lambda architecture, which puts together the real-time and batch components. You need both components because real-time data arrival always contains fundamental problems.